What is AI Ethics and Why is it Important?

- Jaro Education

- 19 August 2024

- 1:00 pm

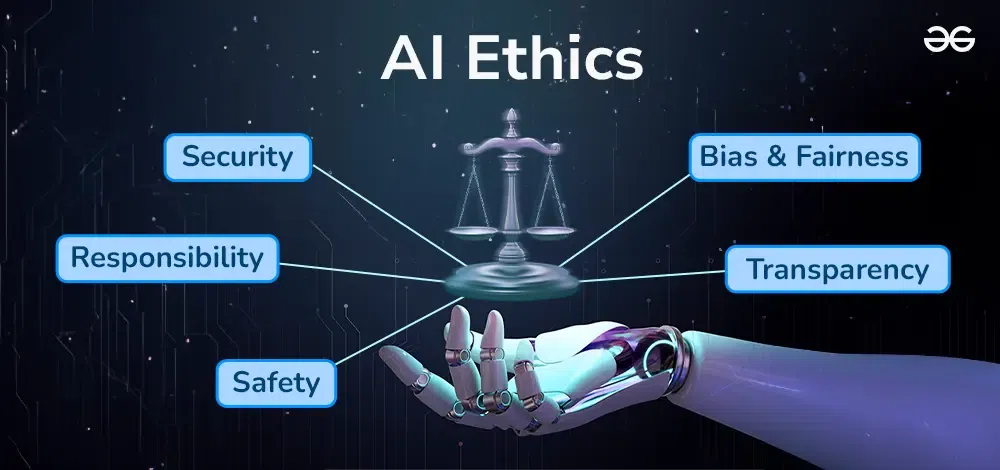

AI Ethics refers to the principles and guidelines that govern the development, deployment, and use of artificial intelligence (AI) technologies. It encompasses ethical considerations aimed at ensuring AI systems are designed and implemented in a responsible and socially beneficial manner.

Ethical considerations are crucial in AI development to address potential risks and ensure that AI technologies benefit society as a whole. Issues such as fairness, transparency, accountability, privacy protection, and bias mitigation play pivotal roles in shaping AI ethics.

The Post Graduate Certificate Programme in Applied Data Science & AI by IIT Roorkee equips participants with comprehensive knowledge and skills in data science and AI. It emphasizes AI ethics, preparing professionals to navigate complex ethical challenges in AI development and deployment.

Historical Background and Emergence of AI Ethics

The emergence of AI Ethics as a distinct field can be traced back to the rapid advancements in artificial intelligence technologies over recent decades. As AI systems have become more sophisticated and pervasive across various sectors—from healthcare and finance to transportation and entertainment—concerns have grown regarding their ethical implications. These concerns are rooted in the potential societal impacts of AI technologies, ranging from job displacement and privacy violations to bias in decision-making processes.

The field of AI Ethics has evolved as a response to these challenges, aiming to ensure that AI technologies are developed, deployed, and managed in a manner that aligns with ethical standards and societal values. The ethical discourse around AI is shaped by philosophical inquiries, legal considerations, and practical implications of AI applications in everyday life.

Key Ethical Principles in AI Development

Ethical principles serve as foundational guidelines for the responsible development and deployment of AI technologies. These principles are designed to mitigate potential harms and maximize the societal benefits of AI systems. Some of the key ethical principles in AI development include:

- Fairness: Ensuring that AI systems treat all individuals fairly and without bias, particularly in decision-making processes related to hiring, lending, and criminal justice.

- Transparency: Making AI systems transparent and understandable to users and stakeholders, disclosing how decisions are made and data is used.

- Accountability: Holding individuals, organizations, and AI systems accountable for their actions, ensuring mechanisms are in place to address errors, biases, or misuse.

- Privacy Protection: Safeguarding individuals’ privacy rights and ensuring that AI systems handle personal data responsibly and securely.

- Bias Mitigation: Identifying and mitigating biases in AI algorithms and datasets to prevent discriminatory outcomes across different demographic groups.

These principles guide ethical AI design and implementation, promoting trust and reliability in AI technologies.

Case Studies Illustrating Ethical Challenges in AI Applications

Real-world examples highlight the complex ethical dilemmas that arise from AI applications:

- Algorithmic Bias in Hiring: AI-powered recruitment tools have been criticized for perpetuating biases based on gender, race, or socioeconomic status, potentially excluding qualified candidates unfairly.

- Privacy Violations in Surveillance: Surveillance technologies equipped with facial recognition and behavioral analysis algorithms raise concerns about privacy infringement and mass surveillance without consent.

- Accountability Issues in Autonomous Vehicles: Ethical considerations arise in autonomous vehicle technology, particularly regarding decisions made by AI systems in critical situations that involve potential harm to passengers, pedestrians, or other drivers.

These case studies underscore the importance of AI ethics in guiding the development and deployment of AI technologies. They highlight the need for continuous dialogue among policymakers, researchers, industry leaders, and the public to address ethical challenges and ensure AI advances benefit society as a whole.

Ethical Concerns in AI

As artificial intelligence (AI) continues to permeate various aspects of our lives, ethical concerns surrounding its development, deployment, and impact have become increasingly prominent. These concerns revolve around ensuring fairness, transparency, accountability, and the responsible use of AI technologies in ways that benefit society while minimizing risks and ethical dilemmas. Understanding and addressing these ethical considerations are crucial for harnessing the full potential of AI to improve human lives and drive sustainable progress.

Bias and Fairness in AI Algorithms

- Identifying Bias: Conducting thorough analyses of datasets and algorithms to detect biases introduced at the data collection, labeling, and processing stages.

- Mitigating Bias: Implementing techniques such as dataset diversification, bias-aware algorithms, and fairness-aware machine learning models to reduce biases.

- Fairness Metrics: Developing and applying fairness metrics (e.g., disparate impact, equalized odds) to quantitatively evaluate and ensure fairness in AI applications.

Ensuring fairness in AI algorithms is crucial for promoting equitable outcomes and preventing the perpetuation of social biases in decision-making processes.
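
The two fairness metrics named above can be computed with a few lines of plain Python. The following is a minimal sketch rather than a production implementation: the function names, the toy labels, and the group names "a" and "b" are all hypothetical, and it assumes binary predictions (0 or 1) with one group label per record. Disparate impact is taken here as the ratio of selection rates between two groups, and equalized odds is summarized as the gaps in true positive rate and false positive rate.

```python
from collections import defaultdict


def _ratio(numerator, denominator):
    """Divide safely; return NaN when a group has no relevant examples."""
    return numerator / denominator if denominator else float("nan")


def selection_rates(y_pred, groups):
    """Fraction of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(y_pred, groups, unprivileged, privileged):
    """Selection rate of the unprivileged group divided by the privileged group's."""
    rates = selection_rates(y_pred, groups)
    return _ratio(rates[unprivileged], rates[privileged])


def error_rates(y_true, y_pred, groups):
    """(true positive rate, false positive rate) for each group."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        # "t"/"f" marks whether the prediction was correct, "p"/"n" its sign.
        key = ("t" if truth == pred else "f") + ("p" if pred == 1 else "n")
        counts[group][key] += 1
    return {
        g: (_ratio(c["tp"], c["tp"] + c["fn"]), _ratio(c["fp"], c["fp"] + c["tn"]))
        for g, c in counts.items()
    }


def equalized_odds_gaps(y_true, y_pred, groups, group_a, group_b):
    """Absolute TPR and FPR differences; both are 0 under perfect equalized odds."""
    rates = error_rates(y_true, y_pred, groups)
    (tpr_a, fpr_a), (tpr_b, fpr_b) = rates[group_a], rates[group_b]
    return abs(tpr_a - tpr_b), abs(fpr_a - fpr_b)


# Toy data (made up for illustration): four records per group.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

di = disparate_impact(y_pred, groups, unprivileged="b", privileged="a")
tpr_gap, fpr_gap = equalized_odds_gaps(y_true, y_pred, groups, "a", "b")
print(di, tpr_gap, fpr_gap)
```

On this toy data, group "b" is selected half as often as group "a", so the disparate impact ratio is 0.5. Which threshold counts as acceptable depends on the application and the applicable law; the metrics only make the disparity visible.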

Privacy Concerns and Data Protection

AI systems rely heavily on vast amounts of personal data to train models and make predictions, which raises significant concerns about privacy and data protection. Key considerations include:

- Data Collection and Consent: Implementing transparent data collection practices and obtaining informed consent from individuals for the use of their data.

- Data Anonymization: Employing techniques such as anonymization, pseudonymization, and encryption to protect individual privacy and prevent the identification of sensitive information.

- Regulatory Compliance: Adhering to stringent data protection regulations (e.g., GDPR, CCPA) and industry standards to ensure responsible data handling practices.

Effective data protection measures are essential for maintaining user trust and safeguarding individuals’ rights in AI-driven applications.
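
As an illustration of the pseudonymization technique mentioned above, the sketch below replaces direct identifiers with a keyed hash (HMAC-SHA256) from Python's standard library. The function names, field names, and the demo key are hypothetical. It assumes the secret key is stored separately from the data, since anyone holding the key can recompute the mapping; for that reason pseudonymized data is generally still treated as personal data, unlike fully anonymized data.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest that stands in for the raw identifier."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, id_fields: set, secret_key: bytes) -> dict:
    """Copy a record, replacing the values of identifying fields with pseudonyms."""
    return {
        field: pseudonymize(str(value), secret_key) if field in id_fields else value
        for field, value in record.items()
    }


# Demo values only: a real key would come from a secrets manager, not source code.
demo_key = b"demo-key-not-for-production"
raw = {"email": "user@example.com", "age_band": "30-39"}
safe = pseudonymize_record(raw, id_fields={"email"}, secret_key=demo_key)
print(safe)
```

The same identifier always maps to the same pseudonym under a given key, so records can still be joined for analysis without exposing the original value.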

Accountability and Responsibility in AI Decision-Making

AI systems increasingly make autonomous decisions that impact various sectors, including healthcare, finance, and criminal justice. Ensuring accountability and responsibility involves several critical strategies:

- Transparent Decision-Making: Providing clear explanations and transparency regarding AI decisions to stakeholders and affected individuals.

- Algorithmic Audits: Conducting regular audits and assessments of AI systems to monitor their performance, detect biases, and ensure compliance with ethical standards.

- Legal and Ethical Frameworks: Establishing robust guidelines, codes of conduct, and regulatory frameworks that hold developers, deployers, and users accountable for AI outcomes.

- Human Oversight: Integrating human oversight and intervention in AI decision-making processes to mitigate risks, address ethical considerations, and ensure decisions align with societal values.

Promoting accountability and responsibility in AI decision-making is crucial for building trust, addressing potential harms, and maximizing the societal benefits of AI technologies.
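
One simple way to combine human oversight with an audit trail is to let the system act on its own only when the model is confident, and to send everything else to a reviewer. The sketch below assumes a model that outputs a score between 0 and 1; the class names, the thresholds of 0.8 and 0.2, and the outcome labels are hypothetical choices for illustration, not values taken from any standard.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Decision:
    case_id: str
    score: float
    outcome: str  # "approve", "deny", or "human_review"
    reason: str


@dataclass
class OversightRouter:
    approve_at: float = 0.8  # scores at or above this are approved automatically
    deny_at: float = 0.2     # scores at or below this are denied automatically
    audit_log: List[Decision] = field(default_factory=list)

    def decide(self, case_id: str, score: float) -> Decision:
        """Route one case and record the result so it can be audited later."""
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must be between 0 and 1")
        if score >= self.approve_at:
            decision = Decision(case_id, score, "approve", "score at or above approval threshold")
        elif score <= self.deny_at:
            decision = Decision(case_id, score, "deny", "score at or below denial threshold")
        else:
            decision = Decision(case_id, score, "human_review", "score in uncertain band")
        self.audit_log.append(decision)
        return decision


router = OversightRouter()
results = [router.decide("case-1", 0.93), router.decide("case-2", 0.55), router.decide("case-3", 0.10)]
for d in results:
    print(d.case_id, d.outcome, d.reason)
```

In a higher-stakes setting, a stricter variant might route every adverse outcome to a human as well; the thresholds are a policy decision rather than a technical one.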

Regulatory Landscape and Guidelines

Governments, organizations, and industry bodies around the world are grappling with how best to regulate AI technologies to ensure they are developed and deployed ethically and responsibly. This section explores the current global regulatory frameworks, industry guidelines, and the collaborative efforts shaping AI ethics policies. Understanding these frameworks is essential for balancing innovation with societal concerns and ensuring AI benefits are maximized while risks are mitigated.

Overview of Global Regulatory Frameworks

The regulatory landscape for AI ethics is evolving globally. Data protection laws such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the US state of California set stringent standards for data protection and privacy that also apply to AI systems:

- GDPR: In force since May 2018, GDPR requires transparent data processing, a lawful basis for data use (such as the individual’s consent), and a right to erasure (the “right to be forgotten”), significantly affecting how AI systems handle personal data within the EU and beyond.

- CCPA: Effective since January 2020, CCPA grants California residents the rights to know what personal information is collected about them, to delete it, and to opt out of its sale, influencing AI applications that process Californian user data.

Industry Guidelines and Best Practices for Ethical AI Development

Industry bodies and organizations have developed guidelines and best practices to promote responsible AI development:

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems: Offers a framework for embedding ethical considerations into AI systems, focusing on transparency, accountability, and societal benefit.

- OECD AI Principles: Set out principles for trustworthy AI, emphasizing human-centric values, fairness, and accountability in AI design and deployment.

Role of Governments, Organizations, and Academia in Shaping AI Ethics Policies

Governments, international organizations, and academia play pivotal roles in shaping AI ethics policies and standards:

- Government Regulation: Legislators worldwide are drafting AI-specific regulations to address ethical concerns of AI, ensure public safety, and foster innovation while balancing risks and benefits.

- Industry Initiatives: Tech giants and industry leaders collaborate on ethical AI initiatives, such as Google’s AI Principles and Microsoft’s AI for Good program, to promote ethical guidelines and standards.

- Academic Research: Universities and research institutions contribute to AI ethics through interdisciplinary studies, exploring ethical implications, societal impacts, and governance frameworks.

Benefits of Ethical AI for Society and Businesses

AI ethics offers substantial benefits to society and businesses:

- Enhanced Trust: AI ethics fosters trust among users, consumers, and stakeholders by promoting fairness, transparency, and accountability in AI systems. When users understand how AI algorithms work and perceive them as fair and transparent, they are more likely to trust and engage with AI-driven technologies.

- Social Impact: AI ethics contributes to societal well-being by addressing biases, protecting privacy, and ensuring inclusivity in AI-driven applications. For instance, in healthcare diagnostics, ethically designed AI algorithms are tested across diverse patient demographics so that diagnostic recommendations are less likely to be biased, which can improve healthcare outcomes for all.

- Business Reputation: Companies adhering to ethical AI principles enhance their brand reputation. They attract ethically conscious consumers who prioritize privacy, fairness, and responsible data use. Moreover, businesses reduce the regulatory risks associated with non-compliance by proactively implementing ethical guidelines and demonstrating commitment to ethical AI practices.

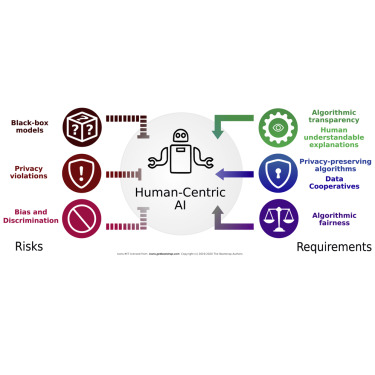

Risks and Consequences of Unethical AI Practices

Unethical AI practices pose significant risks and consequences:

- Bias and Discrimination: Biased AI algorithms perpetuate societal inequalities by producing discriminatory outcomes based on race, gender, or socioeconomic status. For example, biased hiring algorithms may inadvertently favor certain demographic groups over others, leading to unfair hiring practices and perpetuating existing social biases.

- Privacy Breaches: Inadequate data protection measures in AI systems can lead to privacy breaches, unauthorized data access, and misuse of personal information. Ethical AI practices prioritize robust data security measures to safeguard user privacy and prevent unauthorized access or exploitation of sensitive personal data.

- Regulatory Penalties: Non-compliance with regulations that apply to AI systems may result in legal sanctions, fines, and reputational damage for organizations deploying AI technologies. Data protection laws such as the GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act) set strict requirements for data handling and user consent, and impose penalties for violations that compromise user privacy or fail to meet requirements for fair and transparent data processing.

Ethical Considerations in AI Research and Development

Ethical considerations guide AI researchers and developers in responsible innovation:

- Human-Centered Design: Ethical AI prioritizes human well-being and societal benefit in AI development. It ensures that AI systems align with ethical values, respect human rights, and contribute positively to society. Human-centered design approaches involve stakeholders, including end-users and affected communities, in the design process to ensure AI solutions meet real-world needs ethically.

- Ethical AI Impact Assessment: Conducting ethical impact assessments helps evaluate potential risks, societal implications, and ethical dilemmas associated with AI applications. These assessments identify and address ethical concerns early in the development process, so that AI technologies are deployed responsibly and unintended negative consequences are mitigated.

- Continual Monitoring and Adaptation: Regularly monitoring AI systems post-deployment is essential for adapting to emerging ethical challenges and updating ethical guidelines. Continual monitoring allows organizations to respond to evolving societal expectations, technological advancements, and regulatory requirements, ensuring that AI systems maintain ethical standards throughout their lifecycle.

Emerging Trends and Challenges in AI Ethics

Ethical Considerations in AI-Driven Autonomous Systems

- Trend: Advances in autonomous systems, such as self-driving cars and unmanned aerial vehicles, raise profound ethical questions. These systems must make split-second decisions that can impact human safety and well-being.

- Challenge: Balancing safety imperatives with ethical decision-making frameworks is crucial. Ethicists and engineers are working to develop AI algorithms that prioritize human life, adhere to legal standards, and respect societal values.

AI in Healthcare and Biotechnology

- Trend: AI is revolutionizing healthcare by enhancing diagnostic accuracy, enabling personalized treatment plans, and accelerating drug discovery. However, these advancements bring ethical dilemmas concerning patient privacy, consent, and data security.

- Challenge: Safeguarding patient autonomy and ensuring the ethical use of medical data are paramount. Ethicists collaborate with healthcare professionals to establish guidelines that uphold patient rights, mitigate risks of data misuse, and ensure equitable access to AI-driven healthcare solutions.

Algorithmic Bias Mitigation

- Trend: Efforts to mitigate bias in AI algorithms are gaining momentum. Researchers and developers are striving to eliminate biases rooted in historical data, ensure fairness across diverse populations, and promote algorithmic transparency.

- Challenge: Achieving algorithmic fairness requires robust data collection practices, diverse and inclusive datasets, and continuous algorithm auditing. Ethicists advocate for transparency in AI systems to identify and rectify biases, thereby fostering trust and minimizing unintended discriminatory impacts.

Predictions for the Future of AI Ethics Frameworks and Guidelines

Global Harmonization of AI Ethics Standards

- Prediction: There will be a concerted effort to establish unified global standards for the ethical development and deployment of AI. International collaboration among governments, industry leaders, and ethics experts will drive the adoption of universal guidelines.

- Impact: Harmonized standards can facilitate cross-border AI applications, promote interoperability among diverse regulatory frameworks, and enhance public trust in AI technologies worldwide.

Enhanced Transparency and Accountability

- Prediction: Organizations will increasingly prioritize transparency and accountability in AI systems. They will implement mechanisms for explaining AI decisions, disclosing data usage practices, and facilitating user control over personal information.

- Impact: Transparent AI systems build user confidence, mitigate concerns about algorithmic opacity, and empower individuals to make informed choices. Accountability frameworks ensure that AI developers and users are held responsible for ethical lapses and unintended consequences, fostering a culture of ethical stewardship in AI innovation.

The Role of Education and Training in Promoting Ethical AI Practices

Curriculum Integration

- Importance: Educational institutions play a pivotal role in preparing future AI developers, engineers, and policymakers to navigate complex ethical challenges. Integrating ethics into AI and data science curricula ensures that students develop a deep understanding of ethical principles and their application in technological innovation.

- Initiatives: By fostering ethical awareness and critical thinking, educational initiatives equip students to contribute responsibly to AI development and deployment. Programmes like the Post Graduate Certificate Programme in Applied Data Science & AI at IIT Roorkee exemplify efforts to educate aspiring AI professionals on ethical considerations.

Continuing Professional Development

- Significance: Continuous learning and professional development are essential for keeping pace with evolving AI ethics frameworks and best practices. Industry professionals benefit from training programs that address emerging ethical challenges, promote ethical decision-making in AI projects, and encourage collaboration across disciplines.

- Impact: Investing in ongoing education ensures that AI stakeholders remain at the forefront of ethical innovation. By updating skills and knowledge, professionals contribute to ethical advancements in AI technology and advocate for ethical principles in organizational practices.

Conclusion

AI ethics is integral to harnessing the potential of AI technologies for societal benefit. From ensuring fairness and transparency in algorithmic decision-making to safeguarding privacy and promoting accountability, AI ethics upholds public trust and mitigates risks.

Stakeholders—whether developers, policymakers, educators, or users—have a collective responsibility to prioritize ethical AI practices. By fostering transparency, advocating for inclusive AI development, and supporting international cooperation on AI ethics standards, we can shape a future where AI enhances human welfare ethically and sustainably.
