Complete Guide on Overfitting and Underfitting in Machine Learning

- jaro education

- 24, November 2023

- 4:00 pm

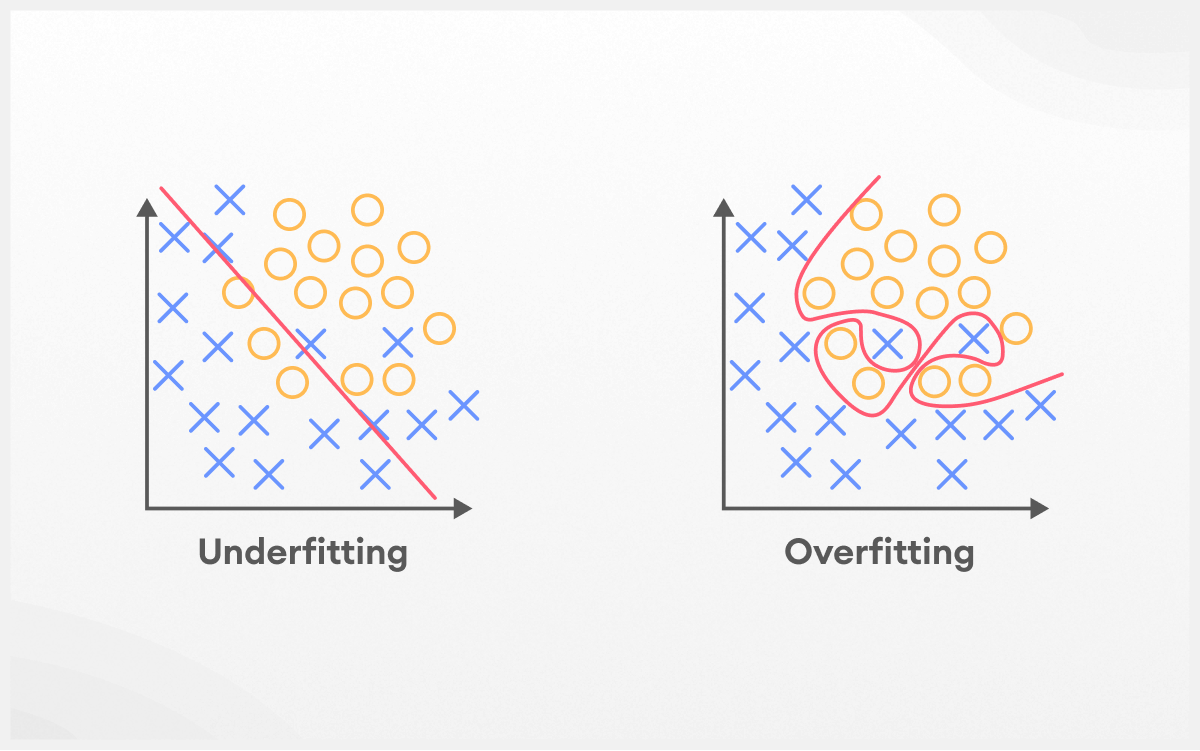

Overfitting and underfitting are two critical concepts in machine learning and the primary reasons for a model’s poor performance. When a model performs well on training data but poorly on new data, it is overfitting, which is generally associated with low bias and high variance. Underfitting, on the other hand, happens when a model has not learned the patterns in the training data, so it performs poorly on the training data and cannot generalise to new data either. This generally occurs due to high bias and low variance.

While creating a machine learning model, developers can try different algorithms to find the best fit. To acquire these coding skills, Manipal University Jaipur is offering an Online MCA Programme. It focuses on developing technology leaders for the changing digital landscape. Candidates will get an immersive learning experience from this course, along with career counselling and placement assistance. To know more about this programme, get in touch with Jaro Education.

How to Identify Overfitting and Underfitting in Machine Learning?

While creating a model, engineers may run into overfitting or underfitting. The following techniques can be used to detect these problems.

Overfitting - How to Detect it?

- Hold out a validation set. Once the model has been trained on the training set, evaluate it on the validation set and compare its accuracy on the two. A training accuracy that is significantly higher than the validation accuracy suggests an overfitted model.

- Another method for detecting overfitting is to begin with a simple model that acts as a benchmark. When you then try more sophisticated algorithms, the benchmark tells you whether the added complexity is actually paying off. This is known as the Occam’s razor test: all else being equal, simpler solutions are favoured over more complicated ones. If a much more sophisticated model does not improve results greatly, the simpler model is preferable.

- To estimate model accuracy, engineers can use a resampling strategy. K-fold cross-validation is the most commonly used resampling approach. It trains and tests the model k times on different subsets of the training data to estimate performance on unseen data. The disadvantage is that it takes time, since the model is trained k times, which can make it impractical for expensive models such as deep neural networks.
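The checks above can be sketched in a few lines of NumPy. This is only an illustration under assumed conditions: the sine-shaped data, the polynomial models, and the helper name `kfold_mse` are invented for the example and are not part of any library. It runs k-fold cross-validation for a simple benchmark model and for progressively more complex ones, and reports the gap between training and validation error.

```python
import numpy as np

def kfold_mse(x, y, degree, k=5, seed=0):
    """Return (mean train MSE, mean validation MSE) over k folds for a polynomial fit."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(x)), k)
    train_err, val_err = [], []
    for i in range(k):
        val = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        coefs = np.polyfit(x[train], y[train], degree)
        train_err.append(np.mean((np.polyval(coefs, x[train]) - y[train]) ** 2))
        val_err.append(np.mean((np.polyval(coefs, x[val]) - y[val]) ** 2))
    return float(np.mean(train_err)), float(np.mean(val_err))

# Hypothetical toy data: a noisy sine curve with only 30 samples.
rng = np.random.default_rng(42)
x = rng.uniform(-1, 1, 30)
y = np.sin(3 * x) + rng.normal(0, 0.2, 30)

results = {}
for degree in (1, 3, 12):          # degree 1 is the simple benchmark
    results[degree] = kfold_mse(x, y, degree)
    tr, va = results[degree]
    print(f"degree={degree:2d}  train MSE={tr:.3f}  validation MSE={va:.3f}  gap={va - tr:.3f}")
```

A low training error combined with a much higher validation error is the warning sign for overfitting. If the more complex model does not beat the benchmark on validation error, the simpler one is the safer choice.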

Underfitting - How to Detect it?

- If you plot the data points together with the fitted curve (or the classifier’s decision boundary) and the curve is overly simplistic compared with the data, the model is most likely underfitting. In such circumstances, a more complex model should be tried.

- If the model is underfitting, the loss on both the training and the validation data will be significant. In other words, the training and validation errors will both be large for an underfitting model.
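As a rough illustration of the second point, the sketch below fits a straight line and a quadratic to data that is assumed, purely for the example, to follow a quadratic pattern. The underfitting linear model shows a large error on both the training and the validation split.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-3, 3, 200)
y = x ** 2 + rng.normal(0, 0.5, 200)    # assumed quadratic ground truth plus noise

train, val = np.arange(150), np.arange(150, 200)

def mse(coefs, idx):
    """Mean squared error of a polynomial model on the given rows."""
    return float(np.mean((np.polyval(coefs, x[idx]) - y[idx]) ** 2))

linear = np.polyfit(x[train], y[train], 1)       # too simple for this data
quadratic = np.polyfit(x[train], y[train], 2)    # matches the shape of the data

print("linear    train/val MSE:", mse(linear, train), mse(linear, val))
print("quadratic train/val MSE:", mse(quadratic, train), mse(quadratic, val))
```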

Ways to Avoid Overfitting

Overfitting is a fairly prevalent problem in machine learning, and an enormous amount of research has been dedicated to techniques for preventing it. Here are the basic ways to avoid overfitting.

L1/L2 regularisation

It is a strategy for preventing a model from learning an overly complicated fit, hence reducing the chances of overfitting. In L1 or L2 regularisation, a penalty term is added to the cost function to drive the estimated coefficients towards zero (rather than letting them take more extreme values). With L2 regularisation, weights decay towards zero but do not reach exactly zero, whereas with L1 regularisation weights can decay to exactly zero.
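The difference between the two penalties can be seen in a small NumPy sketch. Everything here is an assumption made for illustration: the synthetic data, the penalty strength `lam`, and the choice of a closed-form solution for L2 and a basic proximal-gradient loop (often called ISTA) for L1. It is not how any particular library implements regularisation.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 200, 5
X = rng.normal(size=(n, d))
true_w = np.array([3.0, 0.0, 0.0, -2.0, 0.0])    # only two informative features
y = X @ true_w + rng.normal(0, 0.5, n)
lam = 0.5                                        # penalty strength (assumed value)

# Unregularised least squares, for reference.
w_ols = np.linalg.lstsq(X, y, rcond=None)[0]

# L2: minimise (1/2n)||Xw - y||^2 + (lam/2)||w||^2, which has a closed form.
w_l2 = np.linalg.solve(X.T @ X / n + lam * np.eye(d), X.T @ y / n)

# L1: minimise (1/2n)||Xw - y||^2 + lam * ||w||_1 with proximal gradient steps.
def soft_threshold(z, t):
    """Shrink each entry towards zero by t, setting small entries exactly to zero."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

step = 1.0 / np.linalg.eigvalsh(X.T @ X / n).max()
w_l1 = np.zeros(d)
for _ in range(1000):
    grad = X.T @ (X @ w_l1 - y) / n
    w_l1 = soft_threshold(w_l1 - step * grad, step * lam)

print("OLS:", np.round(w_ols, 3))
print("L2 :", np.round(w_l2, 3))
print("L1 :", np.round(w_l1, 3))
```

The L2 solution shrinks every coefficient but leaves them non-zero, while the soft-thresholding step in the L1 loop is what allows coefficients to land exactly on zero.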

Dropout

Dropout is a form of regularisation that randomly ignores (drops) a subset of the network’s units with a set probability during training. Professionals may use dropout to limit correlated learning between units, which could otherwise result in overfitting. However, with dropout, the model typically needs additional epochs to converge.
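A minimal sketch of the idea, assuming the commonly used "inverted dropout" variant in which the surviving units are rescaled during training so that nothing needs to change at inference time. The function name `dropout` and its parameters are chosen for this example only.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each unit with probability drop_prob during training."""
    if not training or drop_prob == 0.0:
        return activations                     # no units are dropped at inference time
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob      # rescale so the expected value is unchanged

rng = np.random.default_rng(0)
h = np.ones((4, 1000))                         # placeholder layer activations
out = dropout(h, drop_prob=0.3, rng=rng)
print("fraction of units dropped:", np.mean(out == 0))
```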

Data augmentation

Data augmentation approaches in machine learning increase the quantity of data by adding slightly modified copies of existing data points, or by creating synthetic data from an already existing dataset.
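For image data, this might look like the sketch below. It assumes greyscale images stored as a NumPy array with values in [0, 1]; the helper `augment`, the mirror flip, and the noise level are illustrative choices rather than a recommended recipe.

```python
import numpy as np

def augment(images, rng, noise_std=0.02):
    """Return the originals plus a mirrored copy and a lightly noised copy of each image."""
    flipped = images[:, :, ::-1]                               # horizontal flip
    noisy = np.clip(images + rng.normal(0, noise_std, images.shape), 0.0, 1.0)
    return np.concatenate([images, flipped, noisy], axis=0)

rng = np.random.default_rng(0)
batch = rng.random((8, 28, 28))        # 8 placeholder greyscale images
augmented = augment(batch, rng)
print(batch.shape, "->", augmented.shape)
```

In a supervised setting the labels would be repeated in the same order, since a flipped or slightly noisy image keeps the label of its original.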

Adding more data

Adding extra data usually helps machine learning models find the “true” pattern in the data, generalise better, and avoid overfitting. This is not always the case, however: adding data that is erroneous or has a large number of missing values might lead to even poorer outcomes.

Feature selection

Every dataset comes with a set of features (input variables), and a model may build further internal features depending on its number of layers, neurons, and other attributes. Many of these features can be redundant, which results in unnecessary complexity, and the more complex the model is, the more likely it is to overfit. Feature selection keeps the most informative features and discards the rest.
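One simple way to remove redundant input features is a correlation filter. The sketch below is an assumption-laden example rather than a standard routine: the helper `drop_redundant_features` and the 0.95 threshold are invented here, and the filter only catches features that are near-linear copies of one another.

```python
import numpy as np

def drop_redundant_features(X, threshold=0.95):
    """Return the column indices to keep, skipping any column whose absolute
    correlation with an already kept column exceeds the threshold."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= threshold for k in keep):
            keep.append(j)
    return keep

rng = np.random.default_rng(0)
a = rng.normal(size=500)
b = rng.normal(size=500)
# The third column is almost an exact multiple of the first one.
X = np.column_stack([a, b, 2 * a + rng.normal(0, 0.01, 500)])

kept = drop_redundant_features(X)
print("columns kept:", kept)
```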

Ensembling

Ensembling techniques combine predictions from many models. These approaches not only deal with overfitting, but also help solve complicated machine learning problems (such as merging images obtained from various perspectives into an overall view of the surroundings). Boosting and bagging are the most prevalent ensemble procedures.

- Boosting trains a large number of weak learners (constrained models) sequentially, with each learner in the sequence learning from the mistakes of the preceding one. All of the weak learners are then combined into a single strong learner.

- Bagging is another approach for reducing overfitting. It trains a large number of strong learners (unconstrained models), each on a bootstrap sample of the training data, and then combines their predictions to improve accuracy.
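Both procedures can be sketched with NumPy alone. The example makes several assumptions for the sake of brevity: the data is a noisy sine curve, the "strong" learners for bagging are degree-8 polynomials, the weak learners for boosting are one-split regression stumps fitted to residuals, and the helper `fit_stump` and the learning rate are invented for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.sort(rng.uniform(-1, 1, 60))
y = np.sin(3 * x) + rng.normal(0, 0.3, 60)
x_test = np.linspace(-0.9, 0.9, 100)
y_test = np.sin(3 * x_test)

# Bagging: average many flexible models, each fitted to a bootstrap resample.
bag_preds = []
for _ in range(50):
    idx = rng.integers(0, len(x), len(x))          # sample rows with replacement
    coefs = np.polyfit(x[idx], y[idx], 8)          # flexible, high-variance learner
    bag_preds.append(np.polyval(coefs, x_test))
bag_preds = np.array(bag_preds)
mse_individual = float(np.mean((bag_preds - y_test) ** 2, axis=1).mean())
mse_bagged = float(np.mean((bag_preds.mean(axis=0) - y_test) ** 2))

# Boosting: add weak learners one at a time, each fitted to the current residuals.
def fit_stump(x, r):
    """Least-squares regression stump: one threshold, one constant on each side."""
    best = None
    for t in np.unique(x)[:-1]:
        left = x <= t
        lv, rv = r[left].mean(), r[~left].mean()
        err = np.sum((r - np.where(left, lv, rv)) ** 2)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    _, t, lv, rv = best
    return lambda z: np.where(z <= t, lv, rv)

learning_rate = 0.5
boost_pred = np.zeros_like(y)
boost_train_mse = []
for _ in range(30):
    stump = fit_stump(x, y - boost_pred)           # learn from the previous mistakes
    boost_pred += learning_rate * stump(x)
    boost_train_mse.append(float(np.mean((y - boost_pred) ** 2)))

print("average single-model test MSE:", mse_individual)
print("bagged test MSE:              ", mse_bagged)
print("boosting train MSE, first/last round:", boost_train_mse[0], boost_train_mse[-1])
```

Averaging never does worse than the average individual model in terms of squared error, which is why bagging is a common remedy for high-variance learners. Boosting works from the other direction, building a strong model out of many deliberately simple ones.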

Ways to Avoid Underfitting

Reducing underfitting is largely about increasing the complexity of the model and of the training process so that the model can capture more of the patterns in the data. The following methods can help professionals avoid underfitting:

Adjusting regularisation parameters

Many algorithms include regularisation settings by default to prevent overfitting. These settings can occasionally be strong enough to prevent the algorithm from learning. When attempting to reduce underfitting, relaxing them slightly is typically helpful.
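The effect is easy to see with ridge (L2-penalised) regression, used here only as a convenient stand-in for "an algorithm with a regularisation setting". The synthetic data and the list of penalty strengths are assumptions for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 10))
y = X @ rng.normal(size=10) + rng.normal(0, 0.1, 100)

def ridge_train_mse(lam):
    """Training MSE of ridge regression with penalty strength lam."""
    w = np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)
    return float(np.mean((X @ w - y) ** 2))

lams = [1000.0, 100.0, 10.0, 1.0, 0.1]     # from very strong to weak regularisation
errors = [ridge_train_mse(lam) for lam in lams]
for lam, err in zip(lams, errors):
    print(f"lambda={lam:7.1f}  train MSE={err:.4f}")
```

A very strong penalty keeps the weights so small that the model cannot even fit the training data, and the training error falls as the penalty is relaxed.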

Adding more time for training

Stopping training too early may result in underfitting. To improve outcomes, users can increase the number of epochs or the duration of training.
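A small sketch of this effect, assuming a linear model trained with full-batch gradient descent on synthetic data. The learning rate and epoch counts are arbitrary values picked for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + rng.normal(0, 0.1, 200)

def train(epochs, lr=0.01):
    """Full-batch gradient descent on mean squared error; returns the final training MSE."""
    w = np.zeros(X.shape[1])
    for _ in range(epochs):
        grad = 2 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return float(np.mean((X @ w - y) ** 2))

mse_short = train(epochs=5)      # stopped far too early
mse_long = train(epochs=500)     # given enough time to converge
print("MSE after 5 epochs:  ", mse_short)
print("MSE after 500 epochs:", mse_long)
```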

Increasing model complexity

The fundamental cause of underfitting is a model that is not complex enough for the properties of the data. For instance, you may have data with up to 100,000 rows and more than 30 features. If you train a Random Forest model on this data and set max depth (the maximum depth of each tree) to a very small value, your model will almost certainly underfit. Training a more complex model (in this case, one with a greater max depth value) will help resolve the underfitting problem.
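To show the role of max depth without depending on a particular library, the sketch below swaps the Random Forest for a single hand-written regression tree on one feature. The helpers `build_tree` and `predict`, the toy data, and the depth values are all assumptions made for this example. In a real forest the same limit applies to every tree.

```python
import numpy as np

def build_tree(x, y, max_depth, depth=0):
    """Greedy least-squares regression tree on a single feature."""
    if depth == max_depth or len(np.unique(x)) < 2:
        return float(y.mean())                       # leaf: predict the mean target
    best = None
    for t in np.unique(x)[:-1]:
        left = x <= t
        sse = ((y[left] - y[left].mean()) ** 2).sum() + ((y[~left] - y[~left].mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t)
    t = best[1]
    left = x <= t
    return (t,
            build_tree(x[left], y[left], max_depth, depth + 1),
            build_tree(x[~left], y[~left], max_depth, depth + 1))

def predict(tree, values):
    """Walk each value down the tree until a leaf is reached."""
    out = []
    for v in values:
        node = tree
        while isinstance(node, tuple):
            t, left_child, right_child = node
            node = left_child if v <= t else right_child
        out.append(node)
    return np.array(out)

rng = np.random.default_rng(0)
x = rng.uniform(0, 10, 300)
y = np.sin(x) + rng.normal(0, 0.1, 300)

train_mse = {}
for max_depth in (1, 3, 6):
    tree = build_tree(x, y, max_depth)
    train_mse[max_depth] = float(np.mean((predict(tree, x) - y) ** 2))
    print(f"max_depth={max_depth}  train MSE={train_mse[max_depth]:.4f}")
```

With a depth of 1 the tree can only output two different values, so it cannot follow the curve at all. Raising the depth removes that restriction, although pushing it too far eventually leads back to overfitting.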

Differences between Overfitting and Underfitting

Both overfitting and underfitting are basic reasons for poor performance in a machine learning model. The differences between them are as follows:

- An overfitted model has high variance, whereas an underfitted model has high bias.

- Overfitting often arises when the training data is inadequate in size and the model trains for numerous epochs on that small dataset. In underfitting, the model has not learned the patterns in the training data, so it cannot generalise to new data effectively.

- Overfitting tends to occur when the model’s design is too complex, for example when many neural layers are stacked together. Deep neural networks are complicated, take a long time to train, and frequently end up overfitting the training set. In the case of underfitting, the model is too simple, for instance a linear model trained on a complex, non-linear problem.

- In the case of overfitting, poorly tuned hyperparameters let the model over-observe the training set during training, leading to memorisation of its features. Poorly tuned hyperparameters can equally result in underfitting, owing to under-observation of the data’s characteristics.

What is a Good Fit in Machine Learning?

A good-fit model is one that is well balanced, neither underfitting nor overfitting. Such a model has a good accuracy score during training and also works well in testing. To find the best-fit model, users can examine the model’s performance over the course of training. As the algorithm learns, the model’s error on the training data diminishes, as does its error on the test dataset. However, if the model is trained for too long, it may pick up superfluous detail and noise in the training set, resulting in overfitting, at which point the error on the test dataset starts to rise. To achieve a good fit, one must stop training just before that error begins to increase.
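Stopping at the right moment is usually automated as "early stopping". The sketch below is one possible version under stated assumptions: a flexible polynomial model trained by gradient descent on toy data, a held-out validation split standing in for the test dataset, and a `patience` setting (an invented parameter name) that controls how long to wait for an improvement.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, 40)
y = np.sin(3 * x) + rng.normal(0, 0.3, 40)
X = np.vander(x, 12, increasing=True)        # flexible degree-11 polynomial features
X_train, y_train = X[:25], y[:25]
X_val, y_val = X[25:], y[25:]

def mse(w, A, b):
    return float(np.mean((A @ w - b) ** 2))

w = np.zeros(X.shape[1])
best_w, best_val, best_epoch = w.copy(), mse(w, X_val, y_val), 0
patience, lr, max_epochs = 200, 0.05, 20000
val_history = [best_val]

for epoch in range(1, max_epochs + 1):
    w -= lr * 2 * X_train.T @ (X_train @ w - y_train) / len(y_train)
    val = mse(w, X_val, y_val)
    val_history.append(val)
    if val < best_val:
        best_w, best_val, best_epoch = w.copy(), val, epoch    # remember the best weights
    elif epoch - best_epoch >= patience:
        break                                # validation error has stopped improving

print("best epoch:", best_epoch, " best validation MSE:", best_val)
print("stopped after", len(val_history) - 1, "epochs")
```

The weights kept at the end are `best_w`, taken from the epoch with the lowest validation error, rather than the weights from the last epoch.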

To achieve a good fit in a machine learning model, professionals can apply these techniques:

- Achieving a good fit in ML starts with setting aside a validation dataset: a subset of the data that is held out from training and used to fine-tune the model’s hyperparameters. It is also used to compare candidate models when choosing the final, tuned model.

- Resampling is a repeated sampling approach in which different samples are drawn from the entire dataset on every repetition. The model is trained on these subsets to determine its consistency across different samples. Resampling strategies increase confidence that the model will perform well regardless of the sample used to train it.
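Setting aside a validation set can be as simple as shuffling row indices once and cutting them into disjoint parts. The helper `train_val_test_split` and the 60/20/20 proportions below are assumptions for this sketch, not a fixed rule.

```python
import numpy as np

def train_val_test_split(n_samples, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle the row indices once and cut them into disjoint train/validation/test sets."""
    idx = np.random.default_rng(seed).permutation(n_samples)
    n_test = int(n_samples * test_frac)
    n_val = int(n_samples * val_frac)
    return idx[n_test + n_val:], idx[n_test:n_test + n_val], idx[:n_test]

train_idx, val_idx, test_idx = train_val_test_split(1000)
print(len(train_idx), len(val_idx), len(test_idx))
```

Calling the function again with a different `seed` gives a different split, which is the basic building block of the resampling strategies described above.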

Generalisation in Machine Learning

In machine learning, generalisation refers to the model’s ability to make accurate predictions on previously unseen data. If a model can predict data samples from diverse sets, it is considered to generalise effectively. The learning method must be exposed to diverse subsets of data for the model to generalise. However, how well a model generalises is limited by the following factors:

- The model’s underlying learning mechanism influences how successfully knowledge is generalised.

- The data samples that have been fed into the algorithm for the system to learn from are the crux of the model.

Key Terms Associated with Machine Learning

Test error

Predictive models determine the relationship between the input variable and the desired value. When the model is tested on an unknown dataset, the resulting error is referred to as a test error.

Variance

Variance quantifies how much the model’s predictions change when it is trained on different datasets.

Bias

Bias measures the difference between the model’s prediction and the target value. If the model is oversimplified, the predicted value will be far from the true value, resulting in higher bias.
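Bias and variance can both be estimated empirically by training the same kind of model on many freshly sampled datasets. The sketch below assumes a known "true" function (a sine curve) and polynomial models of two different degrees. Both choices, along with the helper name `bias2_and_variance`, are made up for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(0, 1, 30)
f = np.sin(2 * np.pi * x)                  # assumed "true" function
noise_std, n_datasets = 0.3, 200

def bias2_and_variance(degree):
    """Estimate squared bias and variance of a polynomial model over many noisy datasets."""
    preds = np.empty((n_datasets, len(x)))
    for i in range(n_datasets):
        y = f + rng.normal(0, noise_std, len(x))        # a fresh noisy training set
        preds[i] = np.polyval(np.polyfit(x, y, degree), x)
    bias2 = np.mean((preds.mean(axis=0) - f) ** 2)      # how far the average prediction is off
    variance = np.mean(preds.var(axis=0))               # how much predictions move between datasets
    return float(bias2), float(variance)

results = {degree: bias2_and_variance(degree) for degree in (1, 7)}
for degree, (b2, var) in results.items():
    print(f"degree={degree}  bias^2={b2:.4f}  variance={var:.4f}")
```

The straight line is too simple for the curve and shows the higher bias, while the more flexible polynomial tracks the noise in each dataset and shows the higher variance.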

Training error

The predictive model’s fit is measured by comparing the actual and predicted values. When this is computed on the training data, the resulting error is the training error.

Thus, overfitting and underfitting are two important concepts in machine learning that are connected to the bias-variance trade-off. To avoid them, professionals can apply various techniques that will help them achieve a good fit. If you’re interested in learning about the different concepts of machine learning, then consider doing the Online MCA Programme at Manipal University Jaipur. This two-year programme will expose you to various job opportunities as a data scientist, software consultant, business analyst and more.