Discover the Complete Guide to Reinforcement Learning in AI

Table of Contents

- What is Reinforcement Learning?

- Importance of Reinforcement Learning

- What is AI Reinforcement Learning?

- Value Functions of Reinforcement Learning

- What is Deep Reinforcement Learning and Why Use It?

- Final Words

What is Reinforcement Learning?

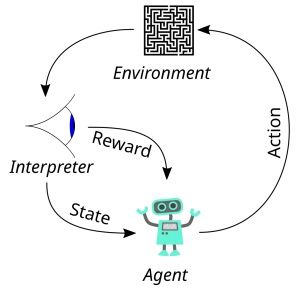

In reinforcement learning, an agent learns by trial and error how to make choices that lead to the best results. The agent interacts with its environment and learns from those interactions by being rewarded or punished. It uses this feedback to discover a policy that maximises its long-term cumulative reward.
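A minimal sketch of this interaction loop follows. It assumes a hypothetical five-cell corridor environment (`CorridorEnv`, not something from the article) in which reaching the last cell earns +1 and every other step costs -0.1. `run_episode` adds up the rewards with a discount factor `gamma`, which is one common way to define "long-term cumulative reward".

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1, the agent starts at 0.

    Reaching the last cell ends the episode with reward +1; every other
    step is a small punishment of -0.1.
    """

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # action 0 = move left, 1 = move right; the agent cannot leave the corridor
        move = 1 if action == 1 else -1
        self.pos = min(max(self.pos + move, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1.0 if done else -0.1
        return self.pos, reward, done


def run_episode(env, policy, gamma=0.9, max_steps=100):
    """Roll out one episode and return the discounted cumulative reward."""
    state = env.reset()
    total, discount = 0.0, 1.0
    for _ in range(max_steps):
        action = policy(state)                 # the agent chooses
        state, reward, done = env.step(action)  # the environment responds
        total += discount * reward              # later rewards count slightly less
        discount *= gamma
        if done:
            break
    return total


if __name__ == "__main__":
    rng = random.Random(0)
    print(run_episode(CorridorEnv(), lambda s: 1))                  # always move right
    print(run_episode(CorridorEnv(), lambda s: rng.randrange(2)))   # random policy
```

The always-right policy collects about 0.458 (three -0.1 steps, then +1 discounted by 0.9³), while a random policy usually earns less. A learning algorithm's job is to find the policy with the higher return from this kind of feedback alone.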

Applications like driverless vehicles, recommendation systems, and financial trading have demonstrated this strategy’s effectiveness.

Importance of Reinforcement Learning

What is AI Reinforcement Learning?

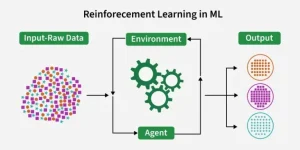

AI reinforcement learning is a part of artificial intelligence that allows systems to learn the best actions through trial and error, using rewards and penalties as guidance. Unlike traditional learning methods that depend on labeled data, AI reinforcement learning emphasizes ongoing interaction with the environment. This lets machines respond dynamically to new situations.
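To make the trial-and-error idea concrete, here is a minimal sketch of an epsilon-greedy agent on a multi-armed bandit, the simplest reinforcement learning setting (one situation, several possible actions). The arms and their success probabilities are hypothetical. The agent never sees those probabilities and is never shown a labeled "correct" arm; it learns only from the 0/1 rewards it receives.

```python
import random


def epsilon_greedy_bandit(true_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn action values for a multi-armed bandit purely from reward feedback.

    true_probs: hypothetical success probability of each arm (hidden from the agent).
    Returns the agent's estimated value of each arm and how often each was pulled.
    """
    rng = random.Random(seed)
    n_arms = len(true_probs)
    estimates = [0.0] * n_arms  # agent's current value estimate per arm
    counts = [0] * n_arms       # number of times each arm was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore: random action
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit: best so far
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0   # environment's feedback
        counts[arm] += 1
        # incremental average: nudge the estimate toward the observed reward
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


if __name__ == "__main__":
    estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
    print(estimates, counts)
```

With these settings the agent's estimates should rank the 0.8 arm highest, and it ends up pulling that arm far more often than the others. The `epsilon` parameter sets the exploration trade-off: too little exploration risks locking onto a poor arm early, too much wastes pulls on arms already known to be worse.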

Its significance comes from how closely it mirrors human learning: by experimenting, failing, and improving, it is well suited to complex, real-world tasks such as robotics, self-driving cars, financial modeling, and personalized recommendations.

The advantages of AI reinforcement learning include its ability to handle uncertain and changing environments, uncover strategies beyond human intuition, and optimise for long-term results rather than short-term gains. It thrives today thanks to the combination of big data, faster computing power, and deep neural networks. These advances let reinforcement learning algorithms learn from very large amounts of interaction feedback and improve decision-making at scale. In short, AI reinforcement learning helps organizations build systems that learn, adapt, and improve from experience, much as humans do.

Value Functions of Reinforcement Learning

Value functions are a key notion in reinforcement learning, the type of machine learning used to teach an agent how to make decisions in an environment. A value function estimates how much cumulative reward the agent can expect from being in a specific state or from taking a specific action, which helps the agent make choices that produce the best long-term results.

There are two kinds of value functions. A state-value function gives the value of being in a specific state, while an action-value function gives the value of taking a specific action in a specific state. Both depend on the policy the agent follows afterwards.
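In the usual textbook notation (the symbols are assumed here, since the article does not define them), with policy π, discount factor γ ∈ [0, 1] and rewards R, both value functions are expectations of the discounted return G_t, and they are linked by averaging the action values over the policy's choices:

```latex
G_t = \sum_{k=0}^{\infty} \gamma^{k} R_{t+k+1}

V^{\pi}(s) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s \right]

Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s,\ A_t = a \right]

V^{\pi}(s) = \sum_{a} \pi(a \mid s)\, Q^{\pi}(s, a)
```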

Once the optimal values are known for every state and action, choosing the action with the highest value in each state yields the best course of action. Value iteration is one classic method for computing these optimal values, and therefore the best policy, when the environment's dynamics are known.
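A minimal sketch of value iteration, assuming a hypothetical five-state corridor whose transition model is fully known (value iteration requires that model). Each sweep applies the Bellman optimality backup V(s) ← max over a of [r + γ·V(s′)], and the policy is then read off greedily from the resulting action values.

```python
def value_iteration(n_states=5, gamma=0.9, step_reward=-0.1, goal_reward=1.0, tol=1e-10):
    """Value iteration on a hypothetical deterministic corridor MDP.

    States 0..n_states-1; the last state is terminal. Actions: 0 = left, 1 = right.
    Entering the terminal state pays goal_reward; every other move pays step_reward.
    """
    goal = n_states - 1
    actions = (0, 1)

    def transition(s, a):
        # known, deterministic model: returns (next_state, reward)
        s_next = min(max(s + (1 if a == 1 else -1), 0), goal)
        return s_next, (goal_reward if s_next == goal else step_reward)

    V = [0.0] * n_states  # the terminal state's value stays 0
    while True:
        delta = 0.0
        for s in range(goal):
            # Bellman optimality backup: best one-step lookahead over all actions
            best = max(r + gamma * V[s2] for s2, r in (transition(s, a) for a in actions))
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:  # stop once a full sweep barely changes any value
            break

    # Greedy policy: in each state pick the action with the highest Q(s, a) = r + gamma * V(s')
    policy = []
    for s in range(goal):
        q = [r + gamma * V[s2] for s2, r in (transition(s, a) for a in actions)]
        policy.append(max(actions, key=lambda a: q[a]))
    return V, policy


if __name__ == "__main__":
    V, policy = value_iteration()
    print(V, policy)
```

Here the values come out at roughly 0.458, 0.62, 0.8 and 1.0 for states 0 to 3, and the policy is `[1, 1, 1, 1]` (always move right). When the model is not known, methods such as Q-learning estimate the same quantities from sampled experience instead.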

In simple terms, value functions are a powerful tool in reinforcement learning that help agents learn how to respond best in complex settings and make informed decisions.

What is Deep Reinforcement Learning and Why Use It?

Deep reinforcement learning (DRL) is a kind of machine learning that combines deep neural networks with reinforcement learning, so that an agent can learn through trial-and-error interaction with its environment. The agent receives a reward signal for actions that lead to desired outcomes, and the objective is to learn a policy that maximises the expected cumulative reward over time. The neural networks let the agent approximate policies or value functions over state spaces far too large to list in a table, such as raw camera images.

The Deep Q-Network (DQN), a popular DRL technique, uses a deep neural network to approximate the Q-value function, which gives the expected cumulative reward for taking a specific action in a specific state. To stabilise learning, DQN uses experience replay, which stores past transitions and trains on random samples of them, and a target network, a periodically updated copy of the Q-network used to compute the learning targets.
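The two stabilising ideas can be sketched in plain Python. To keep the example self-contained, a lookup table (a dict keyed by `(state, action)`) stands in for the deep neural network; that substitution is a deliberate simplification, and the names `ReplayBuffer`, `td_targets` and `train_step` are hypothetical. The target formula y = r + γ·max over a′ of Q_target(s′, a′) is the standard DQN target.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions (s, a, r, s_next, done)."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are discarded first

    def push(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size, rng):
        # a uniform random minibatch breaks the correlation between consecutive steps
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_targets(batch, target_q, n_actions, gamma=0.99):
    """DQN targets: r + gamma * max_a' Q_target(s', a'), or just r at episode end."""
    targets = []
    for s, a, r, s_next, done in batch:
        if done:
            targets.append(r)
        else:
            best_next = max(target_q.get((s_next, a2), 0.0) for a2 in range(n_actions))
            targets.append(r + gamma * best_next)
    return targets


def train_step(online_q, target_q, buffer, batch_size, n_actions, rng, lr=0.1, gamma=0.99):
    """One update of the online Q-table toward targets computed with the frozen target table."""
    batch = buffer.sample(batch_size, rng)
    for (s, a, _, _, _), y in zip(batch, td_targets(batch, target_q, n_actions, gamma)):
        q = online_q.get((s, a), 0.0)
        # a real DQN takes a gradient step on (y - Q(s, a))^2; the table just moves toward y
        online_q[(s, a)] = q + lr * (y - q)


if __name__ == "__main__":
    rng = random.Random(0)
    buffer = ReplayBuffer(capacity=1000)
    online_q, target_q = {}, {}
    for step in range(200):
        # hypothetical transitions from a 5-state, 2-action corridor task
        s, a = rng.randrange(5), rng.randrange(2)
        s_next = min(s + 1, 4) if a == 1 else max(s - 1, 0)
        done = s_next == 4
        buffer.push(s, a, 1.0 if done else 0.0, s_next, done)
        if len(buffer) >= 32:
            train_step(online_q, target_q, buffer, 32, n_actions=2, rng=rng)
        if step % 50 == 0:
            target_q = dict(online_q)  # periodic sync: the target lags behind the online table
    print(sorted(online_q.items()))
```

Keeping the target table fixed between syncs means the values being chased do not shift with every update, which is the stabilising effect the target network provides in DQN.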

Proximal Policy Optimization (PPO), another popular DRL technique, optimises the policy directly using gradient ascent. PPO has performed well on tasks in robotics, video games, and natural language processing. For stable learning, it maximises a clipped surrogate objective that discourages large changes to the policy in a single update.
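The clipped surrogate objective itself is short enough to compute by hand. The sketch below covers only that objective, not the neural networks, advantage estimation or gradient step around it. It assumes the probability ratios between the new and old policy and the advantage estimates are already available; `epsilon=0.2` is a commonly used clipping value.

```python
def ppo_clip_objective(ratios, advantages, epsilon=0.2):
    """PPO's clipped surrogate objective (to be maximised), averaged over a batch.

    ratios[i]     = pi_new(a_i | s_i) / pi_old(a_i | s_i)
    advantages[i] = advantage estimate for that (state, action) pair
    """
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped = min(max(r, 1.0 - epsilon), 1.0 + epsilon)
        # the minimum removes any incentive to push the ratio outside [1 - eps, 1 + eps]
        total += min(r * adv, clipped * adv)
    return total / len(ratios)


if __name__ == "__main__":
    print(ppo_clip_objective([1.5, 0.5, 1.0], [1.0, -1.0, 2.0]))
```

For a ratio of 1.5 with advantage 1.0 the term is capped at 1.2, so the update gains nothing from moving the policy further in that direction. For a ratio of 0.5 with advantage -1.0 the pessimistic minimum picks -0.8. This is how PPO avoids large policy changes.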

DRL has achieved astonishing success on complex tasks that were previously thought to be out of reach, such as playing video games and mastering the game of Go. It has also been applied to practical problems, such as controlling autonomous vehicles and reducing energy use in buildings.

Final Words

To sum up, reinforcement learning is a powerful machine learning technique that allows an agent to learn from trial and error and from experience. It is used in a variety of industries, including resource management, gaming, and robotics. To learn more about reinforcement learning, you can consider taking the advanced data science certificate program from IITM Pravartak Technology innovation Hub of IIT Madras.
