Fuel your Gap!

Get instant skill assessment with

the Exclusive Skill Gap Calculator

15+ Years In the Industry

Number of Alumni

World’s Leading Academic Partners

Programs from Diverse Domains

Corporate Learning Centres across India

Our Courses

- All Programs

- Doctorate & PhD

- Online MBA

- Online PG

- Online UG

- General Management & Leadership

- Strategy

- Analytics & Data Science

- Digital Marketing & Analytics

- Finance & Banking

- Supply Chain & Operations

- Healthcare Management

- Human Resource

- Product Management

- Cybersecurity & Cloud Computing

- Technology & Analytics

Admission Closed

Advanced Programme in EV Technology – CEP, IIT Delhi

- Duration: 6 Months

- Application Closure Date: 30th June 2025

Admission Open

Post Graduate Certificate in Digital Marketing & Growth – IIM Visakhapatnam

- Duration: 12 Months

- Application Closure Date: Closing Soon

Admission Open

Executive Programme in Brand Management – IIT Delhi

- Duration: 6 Months

- Application Closure Date: 25th April 2025

Admission Open

Advanced Certification Course in Sensor Technologies and Chip Design – IISc

- Duration: 5 Months

- Application Closure Date: Closing Soon

Admission Closed

Global Online Certification Course on Reinventing Business Operations with Data Analytics – IIM Mumbai

- Duration: 6 Weeks

- Application Closure Date: Closed

Admission Closed

Executive Programme in Artificial Intelligence and Cyber Security for Organizations [EPAI&CSO] – IIM Indore

- Duration: 10 Months

- Application Closure Date: 30th June 2025

Admission Open

Professional Certificate Programme in Strategic Management – IIM Kozhikode

- Duration: 1 Year

- Application Closure Date: Batch 06: 15th June 2025; Batch 07: 30th June 2025

Admission Closed

Certificate Programme in Digital VLSI Design Programme by CEP, IIT Delhi

- Duration: 6 Months

- Application Closure Date: 13th July 2025

Admission Open

Online MBA – Jain University

- Duration: 2 Years

- Application Closure Date: Closing Soon

Industry Oriented Free Online Courses

and upskill your workforce

350,000+ Careers Transformed

2,000+ IIM Alums

1,000+ Corporate Associations

256%* Average Salary Hike Opportunities

Success Stories

Our Alumni Work For

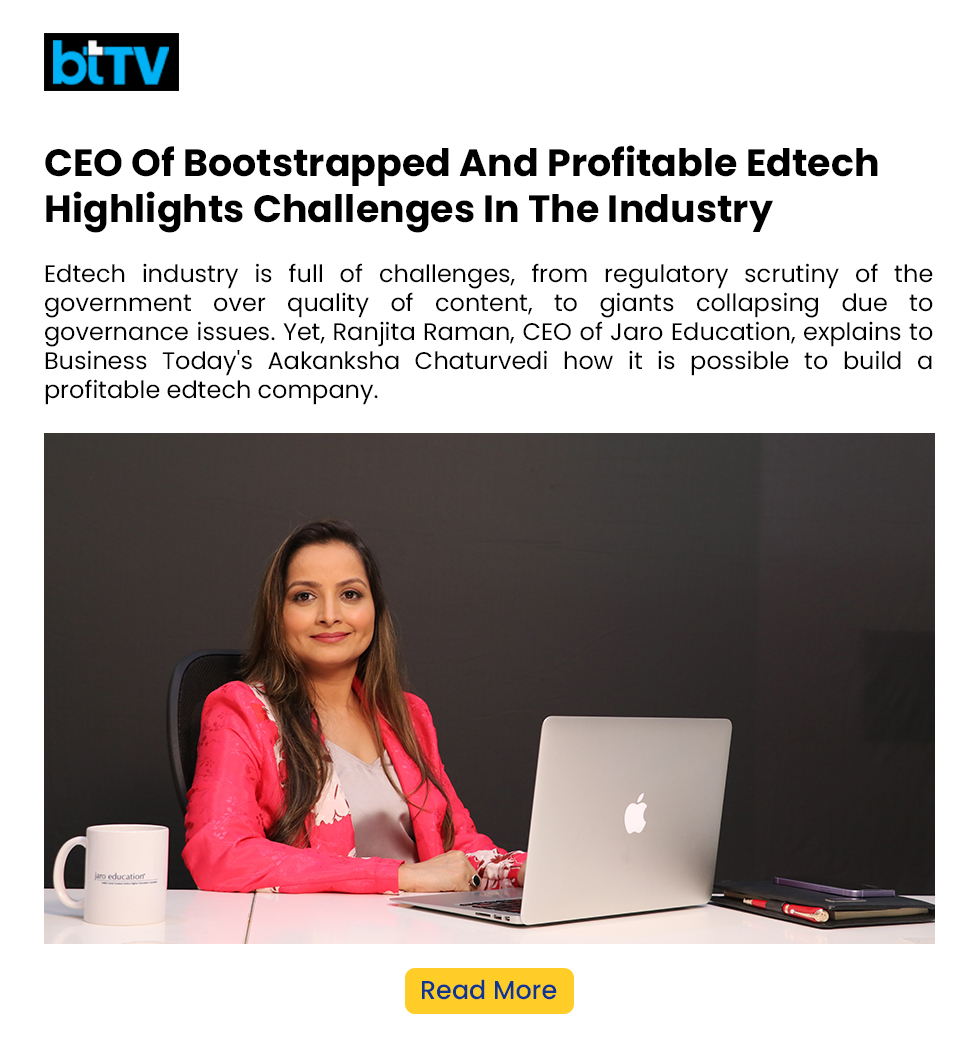

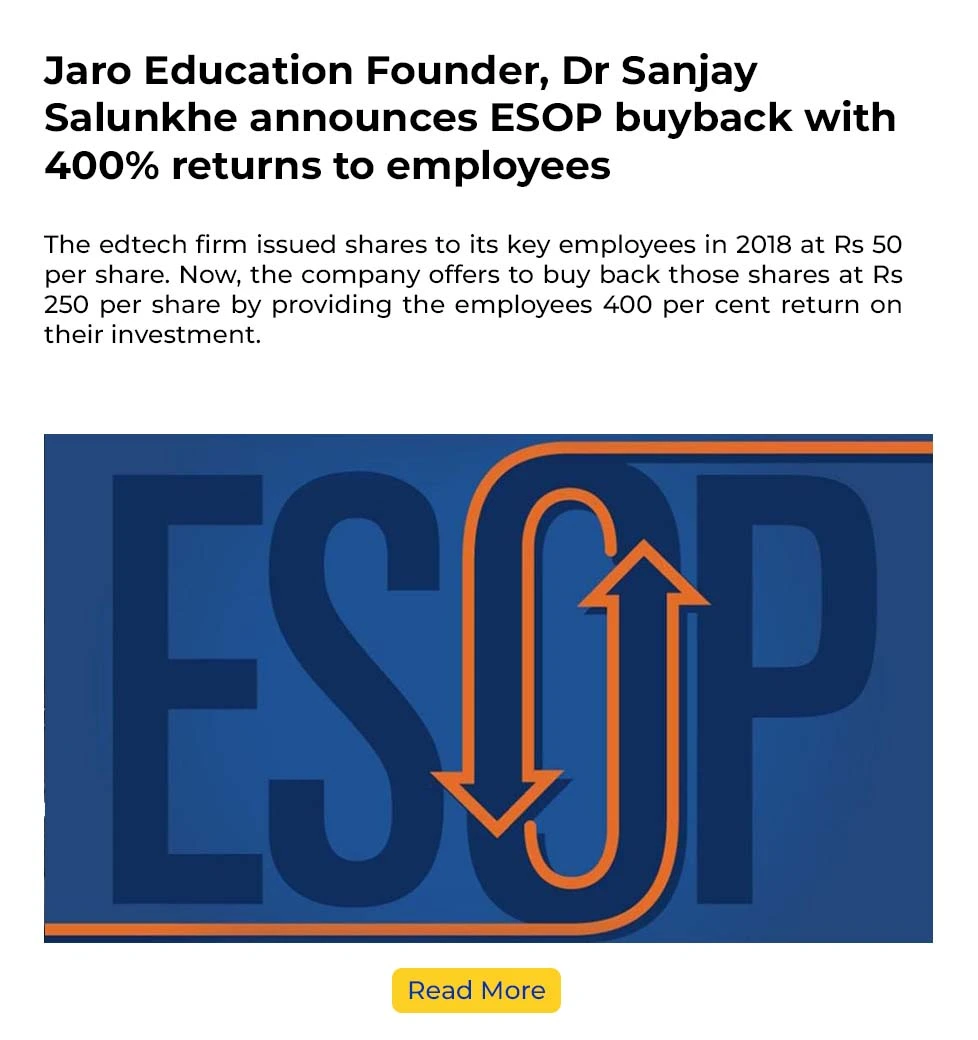

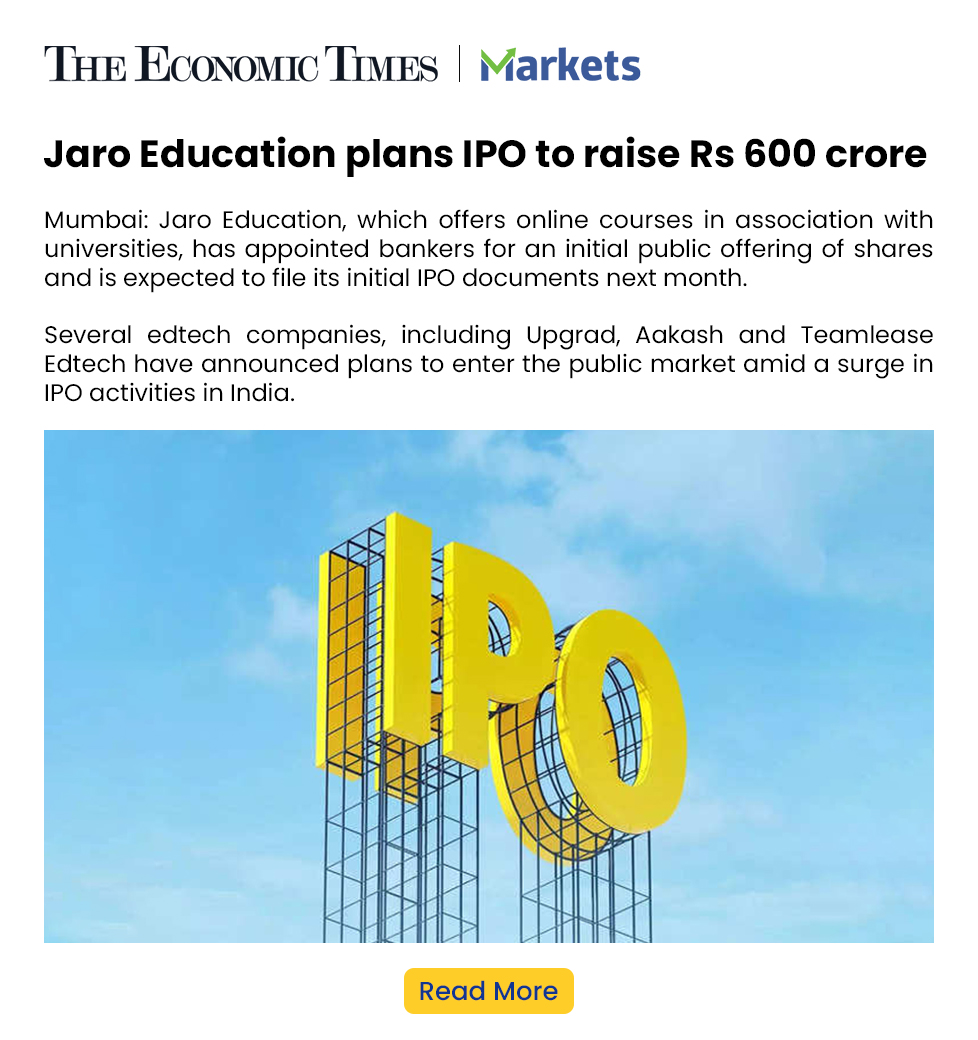

Jaro In Media

Awards & accolades

- 2012: Most Innovative & Successful Online MBA Program in India

- 2017: Outstanding Contribution in Online Education

- 2018: Best Digital Learning Innovation Award

- 2019: The Best ICT Enabled Higher Education Institute Award

- 2022: National Best Employer Award

- 2022: Edtech Leadership Award

- 2023: India’s Most Trusted Online Higher Education Company

- 2024: Times Business Awards North


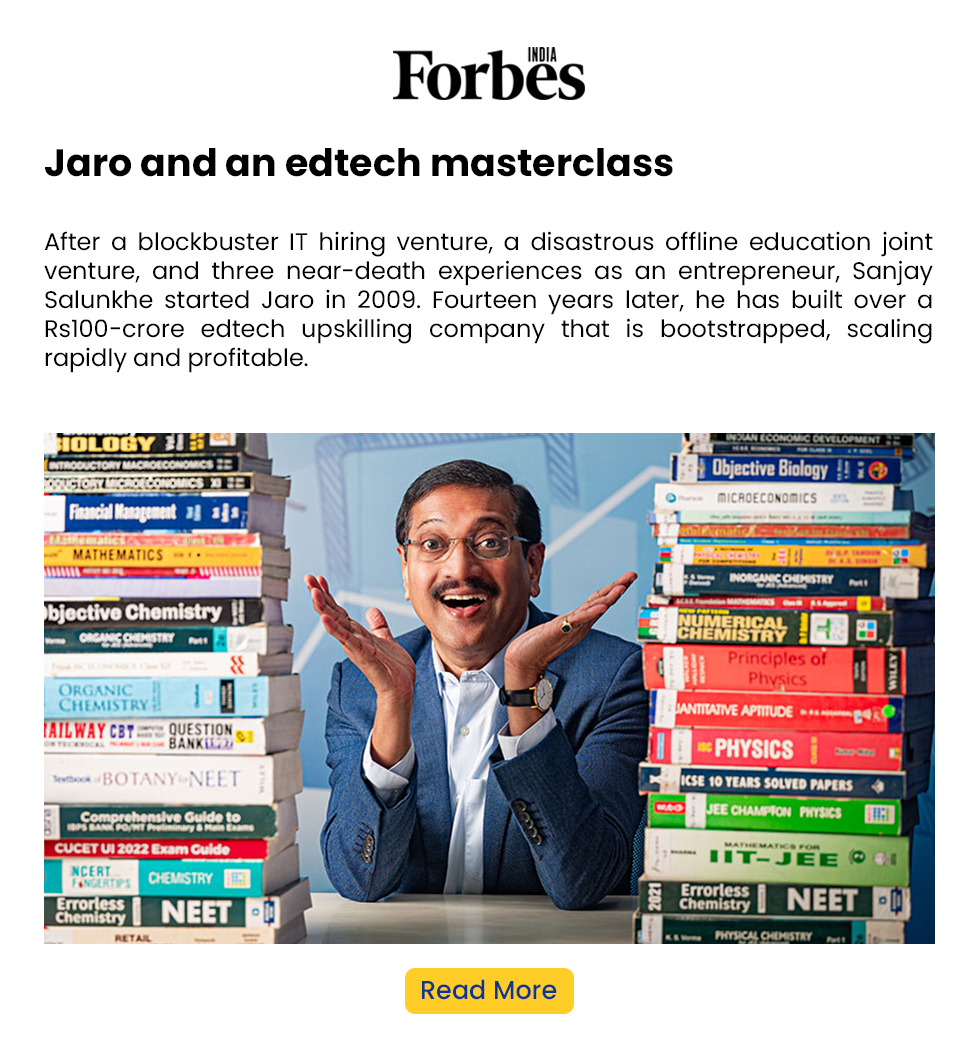

Dr. Sanjay Salunkhe is a first-generation entrepreneur with over three decades of experience in education, human resources consulting, and banking in India and around the world. He is regarded as one of India’s most respected and influential veterans in the online education space.

Dr. Salunkhe began his entrepreneurial journey in 1999, when he founded Net Technologies, a recruitment firm that rose to become one of India’s top ten executive search firms. In 2009 he founded Jaro Education, an edtech firm that collaborates with top universities and institutes to provide management, technology and techno-functional programmes.

Paving new avenues in the educational sector, Jaro Education encourages long-term relationships with its partners based on mutual respect, dependability, and trust. Jaro Education facilitates the development of management and technology-based online programmes for many leading Indian and global institutes like IIM Ahmedabad, IIM Kozhikode, IIT Delhi, Rotman School of Management (University of Toronto), Swiss School of Management, Wharton Interactive, IIT Madras Pravartak and others.

Jaro Education strives to redefine the skill sets of 21st-century professionals and to support their career transitions and success. To meet the needs of busy professionals, Jaro Education offers a value-for-money portfolio of online executive education programmes, covering short- and long-duration certifications, degrees, and doctorate programmes from the world’s finest institutions, including Ivy League universities, IIMs, IITs, and top NIRF-ranked universities and institutions. The programmes span domains such as management, technology, analytics, design thinking, project management, leadership, and finance, and serve learners from early-career to C-suite professionals.

Jaro Education is a committed edtech player that inspires the workforce to achieve breakthroughs through innovation.

At Jaro Education, we believe in linking talent to value. We aim to build a committed workforce that thrives on quality deliverables. Our core values include Trust, Innovation, Growth Orientation, Commitment, and Integrity.

Yes, Jaro Education has a dedicated B2B team that caters to corporate learning & development needs. Jaro Education’s enterprise offerings help your employees upskill seamlessly by learning from top academicians and industry experts.

If you would like to enquire, you can contact us by phone at +91-7208935225 or by email at puja.m@jaro.in.